What is Monolithic Architecture?

Monolithic architecture is a style of software design in which all parts of the software are interconnected and often interdependent. While a program with a monolithic architecture may have many different features and functions, in the back end, all of them are part of a single, self-contained whole.

For decades, most software was developed this way. And the approach does have some advantages. For example, working with a monolithic architecture in the early stages of application development can be simpler, since there is only one codebase to build, test, and deploy.

However, the monolithic approach also has many downsides, including:

- Since everything is interconnected, when one feature or function throws an error, it can impact many other features or crash the program entirely.

- Over time, as the software is updated and expanded, the codebase can become large and difficult to manage, because changing one function may break another function elsewhere in the code that relies on it.

- Even in the case of a minor update to a single feature, the entire application must be recompiled and tested.

Perhaps most significantly, though, scaling monolithic applications is often inefficient. They can be scaled horizontally with relative ease – add a load balancer and as many instances of the application as are required – but this approach can be overly expensive.

To understand why, imagine a cloud-based banking application that was developed with a monolithic architecture.

As the application’s user base grows and users start making more deposits, the bank adds instances of the application to its cloud deployment to meet the demand. But to ensure performance doesn’t degrade and impact the user experience, the bank has to scale the entire application horizontally at the pace at which its most popular feature is growing.

In other words, the bank may be seeing enough deposits and withdrawals to warrant paying cloud fees for ten instances of the application because that’s how many are needed to maintain performance. But that means it has ten instances of the entire application, including features like international wire transfer that see far less use and don’t yet need to be duplicated ten times.

Ultimately, the bank is paying for more than it actually needs because its architecture is monolithic. It can’t just scale up its deposit functions because everything is interconnected, making scaling an all-or-nothing endeavour.

Those disadvantages are why modern software development is rapidly moving towards a different approach.

What is Microservices Architecture?

Microservices architecture is a style of software design where a single application is broken into smaller components, called microservices. Often, these services can communicate with each other, but they are not interdependent. When microservices architecture is done right, making changes to one service doesn’t affect the functionality of other services. As a result, developers can move more quickly, iterating and testing individual features without constantly recompiling and re-testing the entire application.

There are also advantages in terms of scaling. If we return to the example of the banking application, switching to a microservices architecture would mean breaking the application down into separate services for things such as logging in and out, deposits, withdrawals, balance queries, transfers, and account changes.

This can make scaling up much more efficient because it allows you to scale each service in sync with demand.

If many users are making deposits, but very few are making transfers, for example, the bank could add many instances of its deposits microservice to maintain performance without also having to scale up microservices like transfers, where a single instance is enough to handle the load. Consequently, the bank may spend much less on cloud deployment.
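To make the comparison concrete, here is a rough back-of-the-envelope sketch. The load figures and the per-instance capacity are invented for illustration, and the model is deliberately simple: it counts how many copies of each feature end up deployed and ignores the overhead that each separate service adds in practice.

```python
import math


def instances_needed(requests_per_second: float, capacity_per_instance: float) -> int:
    """Smallest number of instances that can absorb the load (always at least one)."""
    return max(1, math.ceil(requests_per_second / capacity_per_instance))


# Hypothetical peak load per feature, in requests per second.
load = {"deposits": 950, "withdrawals": 400, "transfers": 60}
CAPACITY = 100  # assumed requests/second one instance can serve for a single feature

# Monolith: every instance carries every feature, so the whole application
# is replicated at the pace of its busiest feature.
monolith_instances = max(instances_needed(rps, CAPACITY) for rps in load.values())
monolith_feature_copies = monolith_instances * len(load)

# Microservices: each service is scaled on its own.
service_instances = {name: instances_needed(rps, CAPACITY) for name, rps in load.items()}
microservice_feature_copies = sum(service_instances.values())

print(monolith_instances, monolith_feature_copies)      # 10 30
print(service_instances, microservice_feature_copies)   # {'deposits': 10, 'withdrawals': 4, 'transfers': 1} 15
```

Under these assumed numbers, the monolith deploys ten copies of the transfers feature even though one would do, which is the waste the banking example describes.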

Microservices architectures are not without drawbacks, of course.

The complex web of interactions between services can become challenging to work with as businesses scale up; some applications may have hundreds of different microservices or even more. The initial deployment of a microservices application can also be more complex than that of a monolithic application. However, once things are up and running, microservices deployments become much faster and simpler, since developers can update and deploy a new version of a single service quickly instead of having to redeploy the entire app!

Benefits of Microservices in CMS

So, how does all of this apply to a content management system (CMS)? Well, the benefits of microservices architecture generally also apply to a CMS. For example, with a monolithic CMS, if some individual function throws an error, there’s a good chance it could break all of the content on your site (or even the entire site itself if you’re not using a headless CMS).

Another specific advantage is that a microservices CMS is typically API-driven, meaning that each service communicates with the other services predictably, in accordance with each service’s API documentation and industry conventions about APIs (generally REST APIs). This can make integrating new tools and services relatively straightforward, whereas, with a monolithic application, these integrations would likely require manual coding work to ensure each new tool could “speak” to the application (and vice versa).

Monolith DXP vs Composable DXP with Microservices

The situation is similar when considering different architectures for digital experience platforms (DXPs). A monolithic DXP aims to provide an all-in-one solution for everything related to a company’s digital experience (including all of the digital content it serves). Typically this means that it is either:

- A third-party solution that’s cumbersome and inefficient because it’s packed with tons of features to ensure it can be used in a variety of contexts and use cases.

- A customized solution that was developed internally that’s hopefully a more direct fit for your use case, but that likely took a considerable amount of time and money to develop (and that will be similarly difficult and costly to update).

Needless to say, neither of these solutions is ideal. A more modern alternative is a composable DXP – a DXP that has applied the microservices approach. Composable DXPs use a headless CMS and APIs to make integrating microservices easy, allowing the user to create a customized DXP that offers all the features they need and none of the features they don’t.

In some cases, composable DXPs can even come with pre-built integrations, making it even simpler to integrate the CMS with popular back-end and front-end tools.

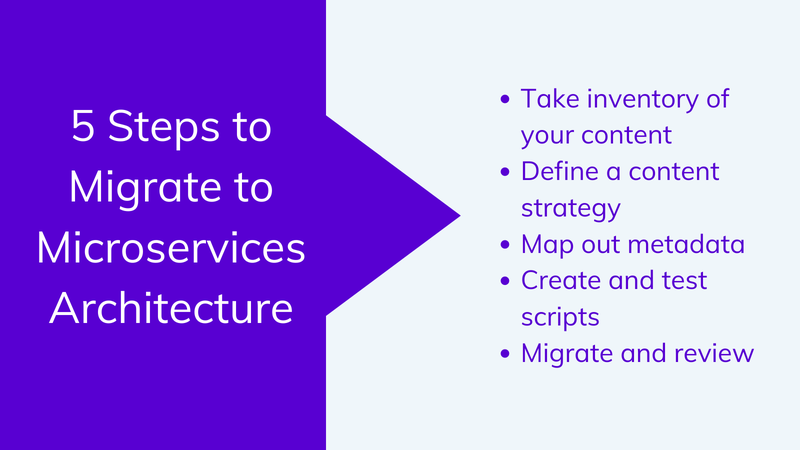

5 Steps to Migrate to Microservices Architecture

Adopting a microservices architecture for your CMS comes with a lot of tangible benefits, but unless you’re building a new system from scratch, it also means migrating everything that’s in your current CMS into something new and different, which can be a daunting task.

It doesn’t have to be tricky, though! If you approach the migration process with care and take the appropriate preparatory steps, you can minimize the challenges and the time required to move between systems.

Take inventory of your content

The first step is to conduct a careful inventory of what you already have. This might include blog posts, images, videos, guides, and more, but don’t forget about some of the less visible elements of your content, such as metadata.

You’ll want to pull or create spreadsheets for each type of content to catalogue what you have, where it’s stored, and where it will live in the new system. This will allow you to formulate plans for moving each type of content and (hopefully) ensure that you don’t miss anything during the migration process.

Many content management and asset management systems can export spreadsheets, which makes this step a breeze. If yours does not, and you’re moving large amounts of content, you may need to write custom scripts to complete this step. You can put the spreadsheets together manually if you don’t have too much content to cover, but be extra careful when taking this approach, as it makes it even easier to miss something by accident.
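If you do end up scripting the inventory, it can be quite small. The sketch below assumes you can already get your content out of the old system as a list of dictionaries; the field names and sample items are hypothetical, not taken from any particular CMS. It writes one spreadsheet row per item and flags anything that doesn’t yet have a home in the new system.

```python
import csv
import io

# Hypothetical column names for the inventory spreadsheet.
FIELDS = ["content_type", "title", "current_location", "target_location"]


def write_inventory(items, out):
    """Write one CSV row per content item and return the number of rows written."""
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    count = 0
    for item in items:
        # Missing fields become empty cells rather than raising an error.
        writer.writerow({field: item.get(field, "") for field in FIELDS})
        count += 1
    return count


def missing_targets(items):
    """Titles of items that have no destination in the new system yet."""
    return [item.get("title", "") for item in items if not item.get("target_location")]


# Invented sample data standing in for an export from the old system.
items = [
    {"content_type": "blog_post", "title": "Welcome", "current_location": "/blog/welcome",
     "target_location": "posts/welcome"},
    {"content_type": "image", "title": "Team photo", "current_location": "/media/team.jpg"},
]

buffer = io.StringIO()  # swap for open("inventory.csv", "w", newline="") to write a real file
rows_written = write_inventory(items, buffer)
unplaced = missing_targets(items)
```

Here `unplaced` lists the items you still need to make a decision about before the migration plan is complete.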

Define a content strategy

This step is where the rubber really starts to meet the road. First, you’ll need to assess the lists you created in step one to determine which content you want to bring over to the new system and which content (if any) you’re OK with losing. This typically means assessing your broader company content strategy and doing a deep dive into your content analytics to ensure that you’re planning to bring over all of your most valuable content.

Once you know what you’re bringing, create a plan for how it will be migrated to the new system. If you have a small amount of content, you may opt to go the manual copy-paste route, but generally speaking, the easiest way to migrate will be to develop custom scripts that pull your content from the old system and insert it into the new system.

You don’t need to create those scripts during this step, but you need to determine what will be required so that your engineering or development team can scope the work and begin planning.

There are also pre-made migration tools that, depending on the systems that you’re migrating to and from, may be able to accomplish what you need without requiring any custom coding. You should look into those options during this step.

Map out metadata

Depending on the system you’re migrating from and the system you’re migrating to, there’s a good chance you’ll want to spend some time mapping out your plans for metadata on the new system. This step is similar to the process of DataOps, where it’s important to have templatized, reproducible labels. Having all content thoroughly tagged and categorized makes it easier to sort and search. If you don’t have this data in your old system, this step may involve creating metadata (such as blog post tags).

Assuming that you’re moving to a headless CMS with a microservices architecture, you may also need to adjust the metadata format or change how it’s handled to be compatible with the new system. While content relationships may have been hard-coded into the front end of your previous monolithic solution (for example), your new system will require content metadata stored in the back end with the content so that it can be accessed by any front-end interface you end up implementing.
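As an illustration of what that mapping might look like, here is a minimal sketch that turns a loosely formatted metadata record into a consistent structure stored alongside the content. The old field names (`keywords`, `section`, `related`) and the new ones are assumptions made up for this example; your own systems will have their own.

```python
def normalize_tags(raw: str) -> list[str]:
    """Split a comma-separated tag string into unique, lowercase, hyphenated labels."""
    seen = []
    for tag in raw.split(","):
        label = "-".join(tag.strip().lower().split())
        if label and label not in seen:
            seen.append(label)
    return seen


def map_metadata(old: dict) -> dict:
    """Map a record from the (hypothetical) old format to the (hypothetical) new one."""
    return {
        "title": old["title"].strip(),
        "tags": normalize_tags(old.get("keywords", "")),
        "category": old.get("section", "uncategorized").strip().lower(),
        # Relationships move out of front-end templates and into the content record.
        "related_ids": sorted(set(old.get("related", []))),
    }


old_record = {
    "title": "  Opening a Savings Account ",
    "keywords": "Cloud Banking, cloud banking , API,,",
    "related": [42, 7, 42],
}
new_record = map_metadata(old_record)
```

Running every record through the same function is what keeps the labels reproducible: two posts tagged “Cloud Banking” and “cloud banking” end up with the same `cloud-banking` tag.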

Create and test scripts

Once you’ve got plans for your content and all of the relevant metadata, it’s time to build your migration scripts and test them. The testing step is critical because things don’t always go smoothly on the first try. It’s much less stressful if your migration script mangles a single blog post in a test environment rather than having it mangle your entire content library on production!
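One way to keep that testing low-risk is to give the script a dry-run mode and have it collect failures instead of stopping at the first one. The following is only a sketch: `to_new_format` and the in-memory `test_store` are hypothetical stand-ins for your real transformation logic and your new CMS’s import mechanism.

```python
def migrate(records, transform, insert, dry_run=True):
    """Transform each record and, unless dry_run is set, pass it to insert().

    Returns (migrated_ids, failures) so a bad record is reported, not fatal.
    """
    migrated, failures = [], []
    for record in records:
        record_id = record.get("id")
        try:
            new_record = transform(record)
            if not dry_run:
                insert(new_record)
            migrated.append(record_id)
        except Exception as exc:  # broad on purpose: collect every failure for review
            failures.append((record_id, str(exc)))
    return migrated, failures


def to_new_format(record):
    """Hypothetical transformation; rejects records that have no body."""
    if not record.get("body"):
        raise ValueError("empty body")
    return {"id": record["id"], "title": record["title"].strip(), "content": record["body"]}


test_store = []  # stands in for the new system in a test environment
sample = [
    {"id": 1, "title": " Hello ", "body": "<p>Hi</p>"},
    {"id": 2, "title": "Broken", "body": ""},
]

dry_result = migrate(sample, to_new_format, test_store.append)  # nothing is inserted
real_result = migrate(sample, to_new_format, test_store.append, dry_run=False)
```

The dry run tells you which records would fail before anything is written, so you can fix the script (or the data) and try again.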

Migrate and review

Once you’re confident the scripts are working as intended (or you’re ready to start copy-pasting, if you’re going that route), it’s time to run them on your production site and make the system changeover. Ideally, this should be a relatively quick and painless process.

Regardless of how well your script testing went in the previous step, it’s still important to give all of your content a thorough inspection after the migration to ensure that everything has gone according to plan.
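Part of that inspection can be automated. As a rough sketch, assuming you can export both systems as a mapping of content ID to body text (the IDs and text below are invented), you can compare fingerprints to find anything that went missing, appeared unexpectedly, or changed along the way. If your migration intentionally rewrites content, compare against the expected transformed text instead.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Stable hash of a piece of content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def compare(old_items: dict, new_items: dict) -> dict:
    """Compare two {content_id: body} mappings and report the differences."""
    old_ids, new_ids = set(old_items), set(new_items)
    return {
        "missing": sorted(old_ids - new_ids),      # in the old system only
        "unexpected": sorted(new_ids - old_ids),   # in the new system only
        "changed": sorted(
            cid for cid in old_ids & new_ids
            if fingerprint(old_items[cid]) != fingerprint(new_items[cid])
        ),
    }


old = {"post-1": "Hello", "post-2": "World", "post-3": "Bye"}
new = {"post-1": "Hello", "post-2": "W?rld", "post-4": "Extra"}
report = compare(old, new)
```

A report like this doesn’t replace a human look at the migrated site, but it narrows down where to look first.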

Impact of Migration

With proper forethought and planning, the impact of migration on your site’s functionality should be limited. Depending on your setup, you may be able to keep the old system running in one cloud instance while executing the migration in another, then kill the old instance and promote the new one once the migration has been completed. This type of approach can result in a migration with no perceptible impact on the user.

In some cases, however, it may be simpler just to migrate by taking the system offline during the changeover. This, too, can have a minimal impact on the user experience if it’s scheduled during user downtime and announced ahead of time so that users are aware and expecting a bit of maintenance downtime.

Migrations can undoubtedly be stressful for your development team, but in the long run, migrating to a more flexible and capable microservices architecture provides advantages for everyone.

Challenges of the Migration Process and How to Overcome Them

Common challenges you may encounter during this process include:

- “Dirty” or messy data

- Data that must be transformed into a different format to work with the new CMS

- Poor or overly complex data storage structure

In general, these challenges can be overcome with engineering, although it may require a bit of extra developer time if (for example) your team needs to scrape data out of HTML files because it’s not easily accessible elsewhere in a database.
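For the HTML case, a little scripting goes a long way. Here is a minimal sketch using Python’s standard-library `html.parser`; it assumes, purely for illustration, that each page keeps its title in an `<h1>` and its body text in `<p>` tags. Real legacy pages are usually messier and will need more rules.

```python
from html.parser import HTMLParser


class ArticleExtractor(HTMLParser):
    """Collects the <h1> text as the title and each non-empty <p> as a paragraph."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.paragraphs = []
        self._current = None  # tag currently being captured, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "p"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse runs of whitespace left over from the markup.
            text = " ".join("".join(self._buffer).split())
            if tag == "h1":
                self.title = text
            elif text:
                self.paragraphs.append(text)
            self._current = None


page = "<html><body><h1>Opening  Hours</h1><p>We are open <b>daily</b>.</p><p> </p></body></html>"
extractor = ArticleExtractor()
extractor.feed(page)
extractor.close()
```

After parsing, `extractor.title` and `extractor.paragraphs` hold clean text that can be fed into the same migration scripts as the rest of your content.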

But the more significant problems that typically arise with migrations are more easily avoidable. If you define a clear content strategy, test thoroughly before migrating, and ensure that everyone on the team is on the same page about the migration, you should be in good shape!

AgilityCMS: a CMS with Microservices Architecture

If you’re looking for a cloud-native headless CMS built from the ground up with microservices architecture, check out AgilityCMS. It combines a headless platform's fast and flexible features with easy integration of popular authoring tools. Request a demo today!